Logistic Regression with Scikit-learn

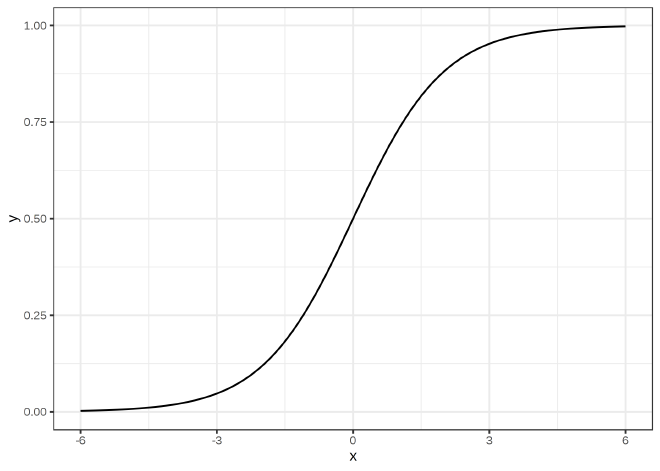

Logistic regression is a supervised machine learning algorithm for classification. It estimates the probability that an observation belongs to each class of a target variable with binary or multi-class categorical outcomes.
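To make the idea of predicting a probability concrete: for a binary target, the model passes a weighted sum of the features through the logistic (sigmoid) function, which maps any real number to a value between 0 and 1. Here is a minimal sketch of that calculation. The weights, intercept, and feature values are made up for illustration and do not come from a fitted model.

```python
import numpy as np


def sigmoid(z):
    """Map real-valued scores to probabilities between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical coefficients and a single observation with two features.
weights = np.array([0.8, -0.4])
intercept = 0.1
x = np.array([2.0, 1.5])

score = intercept + np.dot(weights, x)  # linear part: w . x + b
probability = sigmoid(score)            # probability of the positive class

print('Linear score:', score)
print('Predicted probability:', probability)
```

A probability above 0.5 would typically be read as a prediction of the positive class, although the threshold is a choice you can adjust.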

Below is a simple example to demonstrate the process of running a logistic regression using Python alongside the NumPy, Pandas, and Scikit-learn packages.

In practice, you will need to perform additional data cleaning, preprocessing, and feature engineering steps to prepare your data for modeling.

Import the necessary libraries.

import numpy as np

import pandas as pd

from sklearn.linear_model import LogisticRegression

Load your data.

# In this example, we will use the iris dataset that comes with sklearn.

from sklearn.datasets import load_iris

iris = load_iris()

X = pd.DataFrame(iris.data, columns=iris.feature_names)

y = pd.DataFrame(iris.target, columns=['target'])

Split the data into training and testing sets.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
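With a small dataset it can help to confirm what the split actually produced. The sketch below repeats the split with one addition that is not part of the example above: the `stratify` argument of `train_test_split`, which keeps the class proportions the same in both sets. Whether you need it depends on how balanced your own data is.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# stratify=y is an optional addition: it preserves class proportions in each split.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

print('Training rows:', X_train.shape[0])
print('Testing rows:', X_test.shape[0])
print('Class counts in the test set:', np.bincount(y_test))
```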

Train the model.

logreg = LogisticRegression()

# fit() expects a 1-D target array, so flatten the single-column DataFrame.

logreg.fit(X_train, y_train.values.ravel())
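As mentioned earlier, real projects usually need preprocessing before this step. One common option is feature scaling, sketched below with scikit-learn's `StandardScaler` combined with the model through `make_pipeline`. This is an illustrative variation, not a required part of the example. The name `scaled_logreg` is chosen here for clarity.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# The pipeline learns the scaling from the training data only,
# then applies the same scaling whenever it predicts on new data.
scaled_logreg = make_pipeline(StandardScaler(), LogisticRegression())
scaled_logreg.fit(X_train, y_train)

scaled_accuracy = scaled_logreg.score(X_test, y_test)
print('Accuracy with scaled features:', scaled_accuracy)
```

Keeping the scaler inside the pipeline avoids leaking information from the test set into the training step.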

Predict using the model.

y_pred = logreg.predict(X_test)
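`predict` returns only the most likely class. Because logistic regression models probabilities, you can also inspect them directly with `predict_proba`. The sketch below is self-contained and retrains the model. `max_iter=1000` is an assumption added here as a precaution against convergence warnings and is not part of the example above.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# max_iter is raised above the default as a precaution (an assumption).
logreg = LogisticRegression(max_iter=1000)
logreg.fit(X_train, y_train)

# One row per observation, one column per class, in the order of logreg.classes_.
proba = logreg.predict_proba(X_test[:3])
labels = logreg.predict(X_test[:3])

print('Classes:', logreg.classes_)
print('Probabilities:')
print(proba.round(3))
print('Predicted labels:', labels)
```

Each row of probabilities sums to 1, and the predicted label is the class with the highest probability in that row.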

Evaluate the model.

from sklearn.metrics import accuracy_score

print('Accuracy score:', accuracy_score(y_test, y_pred))
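Accuracy is a single number and can hide which classes the model confuses. As a possible next step, scikit-learn's `confusion_matrix` and `classification_report` break the results down by class. The sketch below is self-contained and retrains the model. `max_iter=1000` is again an added assumption.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=0
)

logreg = LogisticRegression(max_iter=1000)  # max_iter raised as a precaution
logreg.fit(X_train, y_train)
y_pred = logreg.predict(X_test)

# Rows are the true classes, columns are the predicted classes.
cm = confusion_matrix(y_test, y_pred)
print(cm)

# Precision, recall, and F1 score for each species.
print(classification_report(y_test, y_pred, target_names=iris.target_names))
```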

Note: Two common pitfalls when using logistic regression are overfitting and underfitting. Overfitting occurs when the model fits the training data too closely, noise included, and then performs poorly on new data. Underfitting occurs when the model is too simple to capture the relationships between your variables and the target.
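One rough way to check for either problem is to compare accuracy on the training set with accuracy on the test set. The sketch below does this for a few values of `C`, the inverse regularization strength in `LogisticRegression` (smaller values mean stronger regularization). The specific values of `C` and `max_iter` are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

results = {}
for C in [0.01, 1.0, 100.0]:  # illustrative values only
    model = LogisticRegression(C=C, max_iter=1000)
    model.fit(X_train, y_train)
    train_acc = model.score(X_train, y_train)
    test_acc = model.score(X_test, y_test)
    results[C] = (train_acc, test_acc)
    print(f'C={C}: train accuracy={train_acc:.3f}, test accuracy={test_acc:.3f}')
```

Training accuracy that is much higher than test accuracy hints at overfitting, while low accuracy on both sets hints at underfitting. On a small, clean dataset like iris the gap may be slight, so treat this as a pattern to apply to your own data.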