K-Means Clustering with Scikit-learn


K-Means clustering is a popular unsupervised learning algorithm that partitions a dataset into K distinct clusters, assigning each point to the cluster whose center (the mean of that cluster's points) is nearest. I use K-Means clustering to segment loyalty members based on their purchase history; see my post on customer segmentation to learn more.

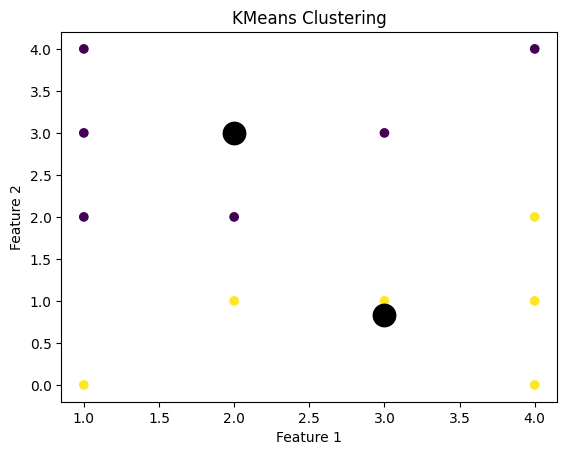
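To make the partitioning concrete, here is a minimal from-scratch sketch of Lloyd's algorithm, the classic way K-Means is computed. It alternates between assigning each point to its nearest center and moving each center to the mean of its assigned points. The helper names `nearest_center` and `kmeans_lloyd` are hypothetical, and the initialization is plain random sampling of data points rather than the k-means++ scheme scikit-learn uses by default, so treat this as an illustration, not a replacement for `KMeans`.

```python
import numpy as np


def nearest_center(X, centers):
    """Return, for each row of X, the index of the closest center (squared Euclidean)."""
    sq_dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return sq_dists.argmin(axis=1)


def kmeans_lloyd(X, k, n_iter=100, seed=0):
    """Minimal K-Means via Lloyd's algorithm (hypothetical helper, for illustration)."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize the centers with k distinct data points chosen at random.
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assignment step: label each point with its nearest center.
        labels = nearest_center(X, centers)
        # Update step: move each center to the mean of its points
        # (an empty cluster keeps its previous center).
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # Stop once the centers no longer move.
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Recompute labels so they are consistent with the returned centers.
    return nearest_center(X, centers), centers


X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0],
              [1, 3], [4, 1], [3, 1], [2, 2], [3, 3], [2, 1]])
labels, centers = kmeans_lloyd(X, k=2)
print(labels)
print(centers)
```

Because the result depends on the random starting centers, different seeds can converge to different local optima. scikit-learn's `n_init` parameter addresses this by running several initializations and keeping the run with the lowest inertia.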

Below is an example that walks through the basic process of running K-Means clustering in Python with the NumPy, Matplotlib, and scikit-learn packages.

In practice, you will need to perform additional data cleaning, preprocessing, and feature engineering steps to prepare your data for modeling.
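One preprocessing step that matters specifically for K-Means is feature scaling: the algorithm relies on Euclidean distances, so a feature measured on a large scale (such as total spend in dollars) can dominate one measured on a small scale (such as order count). A minimal sketch, assuming all features are numeric and that standardization suits your data; the `purchases` array is made-up illustration data, not real loyalty data.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical features on very different scales:
# column 0 = number of orders, column 1 = total spend in dollars.
purchases = np.array([
    [2, 150.0], [3, 180.0], [1, 90.0],
    [20, 2400.0], [25, 3100.0], [22, 2800.0],
])

# Standardize each column to zero mean and unit variance, then cluster.
model = make_pipeline(StandardScaler(), KMeans(n_clusters=2, n_init=10, random_state=0))
labels = model.fit_predict(purchases)
print(labels)

# The fitted centers live in the standardized space; map them back to original units.
centers_original_units = model[0].inverse_transform(model[-1].cluster_centers_)
print(centers_original_units)
```

Wrapping the scaler and the model in one pipeline means any new data passed to `model.predict` is scaled with the same statistics learned during fitting.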

Import the necessary libraries.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cluster import KMeans
```

Generate some example data.

```python
# 12 points with two features each.
X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0],
              [1, 3], [4, 1], [3, 1], [2, 2], [3, 3], [2, 1]])
```

Create a K-Means model with 2 clusters (see Notes).

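```python
# n_init and random_state are set explicitly so results are reproducible and
# consistent across scikit-learn versions (the n_init default changed in 1.4).
kmeans = KMeans(n_clusters=2, n_init=10, random_state=42)
```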

Fit the model to the data.

```python
kmeans.fit(X)
```

Get the predicted labels and cluster centers.

```python
labels = kmeans.predict(X)          # cluster index (0 or 1) for each point
centers = kmeans.cluster_centers_   # coordinates of the 2 cluster centers
```
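`predict` labels each point with the index of its nearest cluster center, which also means a fitted model can assign new, unseen points to the existing clusters. A minimal sketch that refits the model on the example data and checks this nearest-center rule by hand; the `new_points` values are made up for illustration.

```python
import numpy as np
from sklearn.cluster import KMeans

X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0],
              [1, 3], [4, 1], [3, 1], [2, 2], [3, 3], [2, 1]])
kmeans = KMeans(n_clusters=2, n_init=10, random_state=42).fit(X)

# Hypothetical new points to assign to the existing clusters.
new_points = np.array([[0.5, 1.0], [4.5, 3.0]])
new_labels = kmeans.predict(new_points)

# The same assignment by hand: index of the closest center by Euclidean distance.
dists = np.linalg.norm(new_points[:, None, :] - kmeans.cluster_centers_[None, :, :], axis=2)
manual_labels = dists.argmin(axis=1)
print(new_labels, manual_labels)
```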

Plot the data points for each cluster.

```python
# Color each point by its cluster label.
plt.scatter(X[:, 0], X[:, 1], c=labels)
```

Plot the cluster centers (in black), add axis labels and a title, and display the plot.

```python
# Large black markers drawn on top (zorder=10) mark the cluster centers.
plt.scatter(centers[:, 0], centers[:, 1], marker='o', s=200, linewidths=3, color='black', zorder=10)
plt.xlabel('Feature 1')
plt.ylabel('Feature 2')
plt.title('K-Means Clustering')
plt.show()
```

Notes

In this example, we use 2 clusters. In practice, you will often pick a larger number of clusters. See my other post on how to use the elbow method to choose the cluster count.
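The elbow method mentioned above fits K-Means for a range of cluster counts and plots each model's inertia (the within-cluster sum of squared distances, exposed as `inertia_`); the point where the curve stops dropping sharply is a candidate K. A minimal sketch on the example data; the range of 1 to 6 clusters is an arbitrary choice for illustration, and reading the elbow remains a judgment call.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.cluster import KMeans

X = np.array([[1, 2], [1, 4], [1, 0], [4, 2], [4, 4], [4, 0],
              [1, 3], [4, 1], [3, 1], [2, 2], [3, 3], [2, 1]])

ks = list(range(1, 7))
inertias = []
for k in ks:
    # Fit one model per candidate cluster count and record its inertia.
    model = KMeans(n_clusters=k, n_init=10, random_state=42).fit(X)
    inertias.append(model.inertia_)

plt.plot(ks, inertias, marker='o')
plt.xlabel('Number of clusters (K)')
plt.ylabel('Inertia')
plt.title('Elbow Method')
plt.show()
```

With a single cluster the inertia is the total sum of squared distances to the overall mean, and adding clusters generally lowers it, which is why the raw minimum is not a useful choice and the bend in the curve is used instead.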