Binary Classification - Confusion Matrix

Binary Classification Models #

Binary classification is a supervised machine learning task in which a model assigns each observation to one of two classes (0 or 1). The goal is to predict the class of new observations from their input features.

Two real-world examples are predicting whether a credit card transaction is fraudulent and determining whether an e-mail is spam.

The most popular methods used for binary classification include logistic regression, decision trees, random forests, support vector machines, and neural networks. Each classifier has its own pros and cons, and each is better suited to particular use cases.

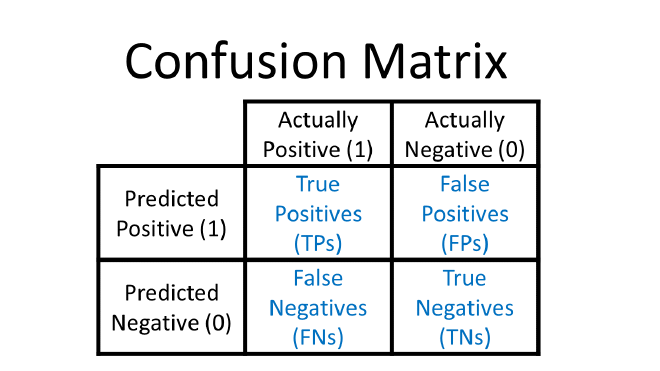

Confusion Matrix #

Regardless of which classifier you are using, a confusion matrix can be used to evaluate its performance.

A confusion matrix is broken into the following four outcomes (using a patient’s health as an example, where "sick" is the positive class):

- True Positive (TP): The patient is sick, and the model correctly predicts the patient to be sick.

- False Positive (FP): The patient is healthy, but the model wrongly predicts the patient to be sick.

- True Negative (TN): The patient is healthy, and the model correctly predicts the patient to be healthy.

- False Negative (FN): The patient is sick, but the model wrongly predicts the patient to be healthy.
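The four outcomes above can be counted directly from a list of actual labels and a list of predicted labels. The following is a minimal sketch, assuming labels are encoded as 1 (sick) and 0 (healthy); the function name `confusion_counts` and the sample data are hypothetical and only for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = sick, 0 = healthy)."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1  # sick, predicted sick
        elif actual == 0 and predicted == 1:
            fp += 1  # healthy, wrongly predicted sick
        elif actual == 0 and predicted == 0:
            tn += 1  # healthy, predicted healthy
        else:
            fn += 1  # sick, wrongly predicted healthy
    return tp, fp, tn, fn


# Hypothetical data for 10 patients (not taken from a real model).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 3 1 5 1
```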

After computing these four matrix values, we can calculate the model’s accuracy, recall, precision, and F-Score using the following formulas:

Accuracy #

Accuracy tells us how often our model has correctly classified an item, as a fraction of all items:

Accuracy = (TP + TN) / (TP + TN + FP + FN)
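As a small worked example, accuracy can be computed from the four confusion matrix counts. This is a sketch only; the function name `accuracy` and the counts passed to it are made up for illustration.

```python
def accuracy(tp, fp, tn, fn):
    """Fraction of all items that were classified correctly."""
    total = tp + fp + tn + fn
    # Guard against an empty confusion matrix.
    return (tp + tn) / total if total else 0.0


# Hypothetical counts: 170 correct predictions out of 200.
print(accuracy(tp=30, fp=20, tn=140, fn=10))  # 0.85
```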

Recall (aka Sensitivity) #

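Recall is the fraction of actual positives that the model correctly identifies:

Recall = TP / (TP + FN)

It is also known as sensitivity because it measures how sensitive the model is to the positive signal when that signal is present. Pushing recall higher typically means labeling more items as positive, so the model also begins to classify some negatives as positive.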
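Recall depends only on the actual positives, that is, on the true positives and false negatives. The sketch below assumes hypothetical counts; the function name `recall` is illustrative.

```python
def recall(tp, fn):
    """Fraction of actual positives that the model found."""
    actual_positives = tp + fn
    # If there are no actual positives, recall is undefined; return 0.0 here.
    return tp / actual_positives if actual_positives else 0.0


# Hypothetical counts: 40 sick patients, 30 of them detected.
print(recall(tp=30, fn=10))  # 0.75
```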

Precision (aka Positive Predictive Value) #

Precision is the accuracy calculated only for the items the model predicts as positive:

Precision = TP / (TP + FP)

So it tells us how often we are correct when we classify an item as positive. Precision is sometimes confused with specificity, but specificity is a different metric: TN / (TN + FP), the fraction of actual negatives that the model correctly identifies.
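Precision depends only on the items predicted as positive, that is, on the true positives and false positives. The sketch below assumes hypothetical counts; the function name `precision` is illustrative.

```python
def precision(tp, fp):
    """Fraction of positive predictions that were actually positive."""
    predicted_positives = tp + fp
    # If the model never predicts positive, precision is undefined; return 0.0 here.
    return tp / predicted_positives if predicted_positives else 0.0


# Hypothetical counts: 50 patients flagged as sick, 30 of them truly sick.
print(precision(tp=30, fp=20))  # 0.6
```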

Trade Offs Between Recall and Precision #

Both recall and precision range from 0 to 1. As a rule of thumb, the closer to 1, the “better” the model is. Unfortunately, you usually cannot have the best of both worlds: for a given model, improving one typically comes at the expense of the other. Therefore:

A high precision model is conservative. It may miss many actual positives, but when it does predict the positive class, we can be fairly confident that the prediction is correct.

A high recall model is liberal. It predicts the positive class much more often, but in doing so it tends to include many more false positives.
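One common way this trade-off shows up is through the decision threshold applied to a model's predicted scores. The sketch below assumes a classifier that outputs a score between 0 and 1 and labels an item positive when the score is at or above a threshold; the scores, labels, and the function name `precision_recall_at` are hypothetical.

```python
def precision_recall_at(threshold, y_true, scores):
    """Compute (precision, recall) when predicting positive for score >= threshold."""
    tp = fp = fn = 0
    for actual, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and actual == 1:
            tp += 1
        elif predicted == 1 and actual == 0:
            fp += 1
        elif predicted == 0 and actual == 1:
            fn += 1
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical labels and model scores, sorted by score for readability.
y_true = [1, 1, 0, 1, 0, 1, 0, 0]
scores = [0.95, 0.85, 0.70, 0.60, 0.45, 0.40, 0.20, 0.10]

# A high threshold is conservative; a low threshold is liberal.
for threshold in (0.8, 0.5, 0.3):
    p, r = precision_recall_at(threshold, y_true, scores)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

On this toy data, lowering the threshold from 0.8 to 0.3 raises recall from 0.50 to 1.00 while precision falls from 1.00 to about 0.67.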

F-Score #

The F-Score, or F1 score, combines precision and recall into a single metric to make evaluation easier. It is the harmonic mean of precision and recall, F1 = 2 × Precision × Recall / (Precision + Recall), and is one of the most common metrics for evaluating a binary classification model.

An increasing F1 score suggests the model has improved its precision, its recall, or both.
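Because the F1 score is a harmonic mean, it stays low when either precision or recall is low, even if the other is high. The following sketch illustrates this with made-up precision and recall values; the function name `f1_score` here is a local helper, not a library call.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both are 0
    return 2 * precision * recall / (precision + recall)


# Balanced precision and recall give a similar F1.
print(round(f1_score(0.6, 0.75), 3))  # 0.667

# A lopsided pair is penalized: the arithmetic mean would be 0.5.
print(round(f1_score(0.9, 0.1), 3))  # 0.18
```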